vSphere with Tanzu

In this post I explore vSphere with Tanzu and why it is an interesting choice for customers who need a platform that serves infrastructure for containerized applications. The alternatives are building Kubernetes manually and maintaining it yourself, or choosing another solution to "orchestrate the orchestrator" Kubernetes.

What is vSphere with Tanzu?

VMware's own description explains it best.

vSphere with Tanzu is a developer-ready infrastructure that delivers:

- The fastest way to get started with Kubernetes: get Kubernetes infrastructure in an hour.

  - Configure an enterprise-grade Kubernetes infrastructure leveraging your existing networking and storage in as little as an hour.

  - Simple, fast, self-service provisioning of Tanzu Kubernetes Grid clusters in just a few minutes.

- A seamless developer experience: IT admins can provide developers with self-service access to Kubernetes namespaces and clusters, allowing developers to integrate vSphere with Tanzu with their development process and CI/CD pipelines.

- Kubernetes to the fingertips of millions of IT admins: Kubernetes can be managed through the familiar environment and interface of vSphere. This allows vSphere admins to leverage their existing tooling and skillsets to manage Kubernetes-based applications. Moreover, it provides vSphere admins with the ability to easily grow their skillset in and around the Kubernetes ecosystem.

vSphere with Tanzu Architecture:

You enable vSphere with Tanzu on a VMware vSphere ESXi cluster. This creates a Kubernetes control plane inside the hypervisor layer. This layer contains objects that enable the capability to run Kubernetes workloads within ESXi.

A VMware vSphere ESXi cluster that is enabled for vSphere with Tanzu is called a Supervisor Cluster.

It runs on top of the SDDC layer that consists of ESXi for compute, NSX-T Data Center or vSphere networking, and vSAN or another shared storage solution.

Shared storage is used for persistent volumes for vSphere Pods, VMs running inside the Supervisor Cluster, and pods in a Tanzu Kubernetes cluster. After a Supervisor Cluster is created, you as a vSphere administrator can create namespaces within it, called Supervisor Namespaces. Each Supervisor Namespace is reflected in vSphere as a resource pool, so IT Ops can control the CPU, memory, and storage quota a developer gets.
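As a small illustration of how shared storage and the namespace quota fit together, a developer can request a persistent volume in a Supervisor Namespace with an ordinary PersistentVolumeClaim. This is a sketch under assumptions: the claim name `demo-pvc` and the 2Gi size are hypothetical, and the storage class `tanzu-storage-policy` is the one used in the TKG cluster manifest later in this post, which presumes the matching storage policy has been assigned to the namespace.

```yaml
# Hypothetical PVC in the Supervisor Namespace tkg01.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-pvc                          # hypothetical name
  namespace: tkg01                        # Supervisor Namespace from this post
spec:
  accessModes:
    - ReadWriteOnce                       # single-node read/write block volume
  storageClassName: tanzu-storage-policy  # storage class assumed to be assigned to tkg01
  resources:
    requests:
      storage: 2Gi                        # hypothetical size
```

The requested capacity should count against the storage limit IT Ops set on the namespace, which is how the quota described above reaches the developer.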

As a DevOps engineer, you can run workloads consisting of containers running inside vSphere Pods and also create Tanzu Kubernetes clusters.

Difference between vSphere Pods on the Supervisor Cluster and Tanzu Kubernetes Clusters (TKG):

The vSphere Supervisor Cluster is Kubernetes set up by vSphere directly on the ESXi hosts, making the ESXi hosts the worker nodes of a Kubernetes cluster. Enabling Tanzu on vSphere also creates three Kubernetes control plane VMs in the cluster to manage the Kubernetes environment.

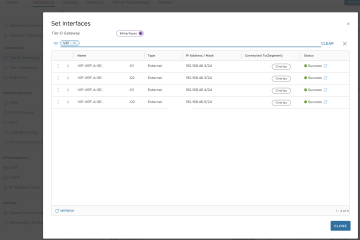

- The three control plane VMs are load balanced, as each of them has its own IP address. Additionally, a floating IP address is assigned to one of the VMs. vSphere DRS determines the exact placement of the control plane VMs on the ESXi hosts and migrates them when needed. vSphere DRS is also integrated with the Kubernetes scheduler on the control plane VMs, so DRS determines the placement of vSphere Pods. When you, as a DevOps engineer, schedule a vSphere Pod, the request goes through the regular Kubernetes workflow and then to DRS, which makes the final placement decision.

- Spherelet. An additional process called Spherelet is created on each host. It is a kubelet that is ported natively to ESXi and allows the ESXi host to become part of the Kubernetes cluster.

- Container Runtime Executive (CRX). CRX is similar to a VM from the perspective of Hostd and vCenter Server. CRX includes a paravirtualized Linux kernel that works together with the hypervisor. CRX uses the same hardware virtualization techniques as VMs and it has a VM boundary around it. A direct boot technique is used, which allows the Linux guest of CRX to initiate the main init process without passing through kernel initialization. This allows vSphere Pods to boot nearly as fast as containers.

- The Virtual Machine Service, Cluster API, and VMware Tanzu™ Kubernetes Grid™ Service are modules that run on the Supervisor Cluster and enable the provisioning and management of Tanzu Kubernetes clusters.
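From the developer's side, a vSphere Pod is requested with a plain Kubernetes Pod manifest applied while logged in to the Supervisor Cluster; the Spherelet, CRX, and DRS placement described above happen behind the scenes. A minimal sketch, assuming (as in this setup) that the Supervisor uses NSX-T networking, which vSphere Pods require in vSphere 7. The Pod name `nginx-vsphere-pod` is hypothetical, and `tkg01` is the Supervisor Namespace used later in this post.

```yaml
# Minimal Pod that runs as a vSphere Pod when applied to a Supervisor Namespace.
apiVersion: v1
kind: Pod
metadata:
  name: nginx-vsphere-pod   # hypothetical name
  namespace: tkg01          # Supervisor Namespace from this post
spec:
  containers:
    - name: nginx
      image: nginx:latest
      ports:
        - containerPort: 80 # port the nginx container listens on
```

Applied with `kubectl apply -f`, a Pod like this should appear in the vSphere Client inventory as a vSphere Pod in the tkg01 namespace.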

Tanzu Kubernetes Grid (TKG):

A Tanzu Kubernetes cluster is a full distribution of the open-source Kubernetes software that is packaged, signed, and supported by VMware. In the context of vSphere with Tanzu, you can use the Tanzu Kubernetes Grid Service to provision Tanzu Kubernetes clusters on the Supervisor Cluster. You can invoke the Tanzu Kubernetes Grid Service API declaratively by using kubectl and a YAML definition. A Tanzu Kubernetes cluster resides in a Supervisor Namespace. You can deploy workloads and services to Tanzu Kubernetes clusters the same way, and with the same tools, as you would with standard Kubernetes clusters.

vSphere Supervisor and Tanzu Kubernetes Grid Use Cases:

Whether to run applications/containers in the vSphere Supervisor layer or in the Tanzu Kubernetes Grid layer depends on the functionality you need as a developer or as IT Operations.

Below are example use cases for each:

Supervisor Cluster:

- Strong security and resource isolation
- Performance advantages
- Serverless experience

Tanzu Kubernetes Cluster:

- Cluster-level tenancy model
- Fully conformant to upstream k8s
- Configurable k8s control plane
- Flexible lifecycle, including upgrades
- Install and customize favorite tools easily

So let’s say I am a developer who would like to use the Supervisor Cluster as the production area and a Tanzu Kubernetes Grid cluster as the development area for my application.

Let’s go into vSphere first and check out the UI:

I’m now logged in as the developer, and I can see my namespace tkg01, created by the IT Operations team.

I have also created a Tanzu Kubernetes Grid cluster for development testing, so that I can manage its lifecycle myself.

To find out which IP/DNS name I, as a developer, should run my kubectl commands against, I check the Link to CLI Tools shown in the picture.

On my Ubuntu machine with kubectl and the vSphere plugin for kubectl installed, I log in to the vSphere Supervisor Cluster.

To see which Kubernetes versions IT Operations has made available, run `kubectl get tanzukubernetesreleases`.

I have created a YAML file containing the specification for a TKG cluster.

In the file I set kind to TanzuKubernetesCluster.

The file also contains the cluster name, the namespace, the Kubernetes version, and the number of control plane and worker nodes I want:

```yaml
apiVersion: run.tanzu.vmware.com/v1alpha1 #TKGS API endpoint
kind: TanzuKubernetesCluster #required parameter
metadata:
  name: tkg01-cl02 #cluster name, user defined
  namespace: tkg01 #vsphere namespace
spec:
  distribution:
    version: 1.20.2 #Kubernetes version, resolved against the available TanzuKubernetesReleases
  topology:
    controlPlane:
      count: 1 #number of control plane nodes
      class: best-effort-small #vmclass for control plane nodes
      storageClass: tanzu-storage-policy #storageclass for control plane
    workers:
      count: 3 #number of worker nodes
      class: best-effort-small #vmclass for worker nodes
      storageClass: tanzu-storage-policy #storageclass for worker nodes
```

Setting up a new Kubernetes cluster is as easy as running:

```
ubuntu@tanzu-cli:~/Tanzu$ kubectl apply -f tkg-cluster.yaml
tanzukubernetescluster.run.tanzu.vmware.com/tkg01-cl02 created
```

In vSphere we can see that the cluster has been created.

Now let’s create a deployment and expose it to the world:

I have a simple nginx web application:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-test
    run: nginx
  name: nginx-test
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-test
  template:
    metadata:
      labels:
        app: nginx-test
    spec:
      containers:
      - image: nginx:latest
        name: nginx
```

and a service of type LoadBalancer:

```yaml
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: null
  labels:
    app: nginx-test
    run: nginx
  name: nginx-test
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx-test
  type: LoadBalancer
status:
  loadBalancer: {}
```

I first log in to my newly created TKG cluster tkg01-cl02 to deploy the test application in my development area.

We are logged in, and the control plane node and the worker nodes are Ready and running version 1.20.2, as specified in the TKG cluster YAML file.

Before we can deploy the application, we need to set permissions for our authenticated users.

The following kubectl command creates a ClusterRoleBinding that grants authenticated users access to run a privileged set of workloads using the default PodSecurityPolicy (PSP) vmware-system-privileged.
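The `kubectl create clusterrolebinding` command below is imperative shorthand. If you prefer to keep cluster configuration in version control, the same binding can be written declaratively and applied with `kubectl apply -f`. A sketch of the manifest that should be equivalent to the command's arguments (only the binding name, ClusterRole, and group from the command are used; nothing else is assumed):

```yaml
# Declarative form of the ClusterRoleBinding created by the command below.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: default-tkg-admin-privileged-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: psp:vmware-system-privileged  # ClusterRole that grants use of the PSP
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: Group
    name: system:authenticated        # every authenticated user
```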

```
kubectl create clusterrolebinding default-tkg-admin-privileged-binding --clusterrole=psp:vmware-system-privileged --group=system:authenticated
```

Now let’s create the deployment and service. Since I have NSX-T integrated with vSphere 7 and enabled it during the Workload Management enablement of Tanzu, NSX-T creates a load balancer and exposes the service to the world on the IP network 10.30.150.0/24.

Listing the services in the cluster, we see that the LoadBalancer service nginx-test has an external IP:

```
ubuntu@tanzu-cli:~/Tanzu/nginx$ kubectl get service
NAME         TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP      10.96.0.1       <none>        443/TCP        23m
nginx-test   LoadBalancer   10.98.212.238   10.30.150.6   80:31971/TCP   6s
supervisor   ClusterIP      None            <none>        6443/TCP       23m
```

Let’s open a browser and see what we got:

Nice! Our web application is up and running in our development TKG cluster, tkg01-cl02.

Now let’s say we are satisfied with development and would like to create the deployment in production, which is supposed to be placed on the Supervisor Cluster in vSphere. We log in to it with the following command:

```
kubectl vsphere login -u a_jimmy@int.rtsvl.se --server=10.30.150.1
```

Let’s get the nodes: we see the ESXi hosts as the worker nodes, along with the three Supervisor control plane VMs.

Let’s deploy the application in production:
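The exact commands for this step are not shown. As a sketch, assuming the same Deployment and Service from the development step are reused, one way to make sure they land in the Supervisor Namespace `tkg01`, rather than whatever namespace the current kubectl context points at, is to set `metadata.namespace` explicitly and keep both objects in one multi-document file. The combined file is an assumption, not necessarily how the author deployed it; it would be applied with `kubectl apply -f` while logged in to the Supervisor Cluster.

```yaml
# Same Deployment as in development, pinned to the Supervisor Namespace.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-test
  namespace: tkg01          # Supervisor Namespace (production in this post)
  labels:
    app: nginx-test
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-test
  template:
    metadata:
      labels:
        app: nginx-test
    spec:
      containers:
      - name: nginx
        image: nginx:latest
---
# Same LoadBalancer Service; NSX-T assigns the external IP.
apiVersion: v1
kind: Service
metadata:
  name: nginx-test
  namespace: tkg01
  labels:
    app: nginx-test
spec:
  type: LoadBalancer
  selector:
    app: nginx-test         # matches the Deployment's pod labels
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
```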

Now we check that the pods are running and that the service has been created; its external IP is set to 10.30.150.8.

Lastly let’s check with a browser again:

Awesome, our frontend web application is up and running in production on the Supervisor Cluster.

Looking in vSphere, we also see the vSphere Pods created for the deployment.

I am excited about how Tanzu and vSphere are always improving to help me and my customers work in the hybrid cloud. I will continue posting new technical and product information about Tanzu.


Thank you.
