AVI – NSX Advanced Load Balancer for Telco Cloud Platform with Tanzu Part 1

This is Part 1 of a longer blog series that shows, step by step, how to configure AVI NSX Advanced Load Balancer (ALB) for Telco Cloud Platform. It uses BGP peering between ALB and NSX-T T0 gateways over overlay segments to expose Kubernetes services for ingress traffic to Telco CNFs (Containerized Network Functions).

What’s Telco Cloud Platform?


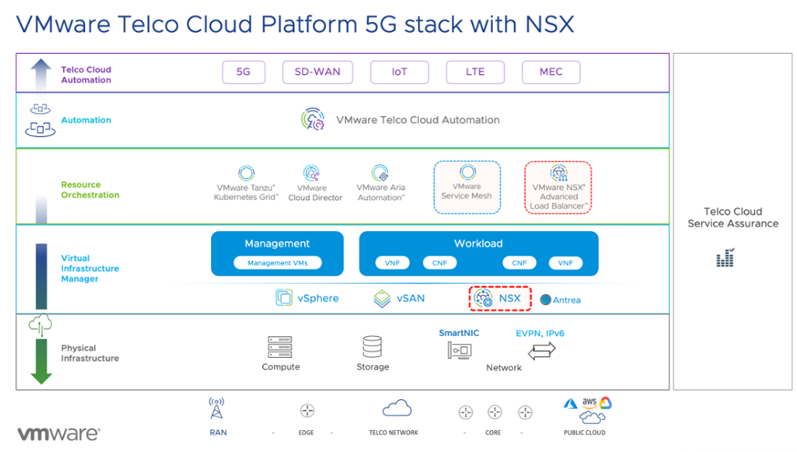

VMware Telco Cloud Platform (TCP) is a cloud-native platform powered by VMware Telco Cloud Infrastructure coupled with automation, providing a cloud-first approach that delivers operational agility for virtual, cloud-native 5G, and Edge network functions. The platform is based on VMware products such as vSphere, NSX-T, Tanzu Kubernetes Grid (TKG), Avi NSX Advanced Load Balancer and more. A full description of all the components and of how TCP is designed can be found here.

ALB in TCP with Tanzu

To increase flexibility, simplify management and reduce costs, VMware Telco Cloud Platform offers a unified, dependable, and marketable solution to deploy network functions from multiple vendors. The integration of VMware NSX Advanced Load Balancer enables the management of incoming network function traffic and provides carrier grade application delivery services.

The Avi Vantage platform provides a centrally managed, dynamic pool of load balancing resources on commodity x86 servers, VMs or containers, to deliver granular services close to individual applications. This allows network services to scale near infinitely without the added complexity of managing hundreds of disparate appliances.

When a Telco CSP works with vendors and applications, a lot of requirements go into each project. The network segmentation requirements are specific to each application and must accommodate that application's demands.

When we look at networks for VNFs and CNFs, one requirement that needs to be solved is that an application may demand its own dedicated VRFs, or may need access to multiple different VRFs.

For VNFs, or VMs, this is quite simple, since we usually have multiple vNICs on each VM going to different VRFs. For CNFs, or Containerized Network Functions, it becomes more complicated.

Since the applications are now containerized, we need a way to expose services for the pods in the container cluster and to have these exposed services on different VRFs. When this also spans multiple Regions or Availability Zones, it gets even more complex.

This is where AVI NSX Advanced Load Balancer comes to the rescue.

Design and Implementation of AVI ALB with TCP and Tanzu

The design and implementation of AVI ALB consists of creating AVI SEs (Service Engines), which are set up by the management plane, the AVI ALB Controllers.

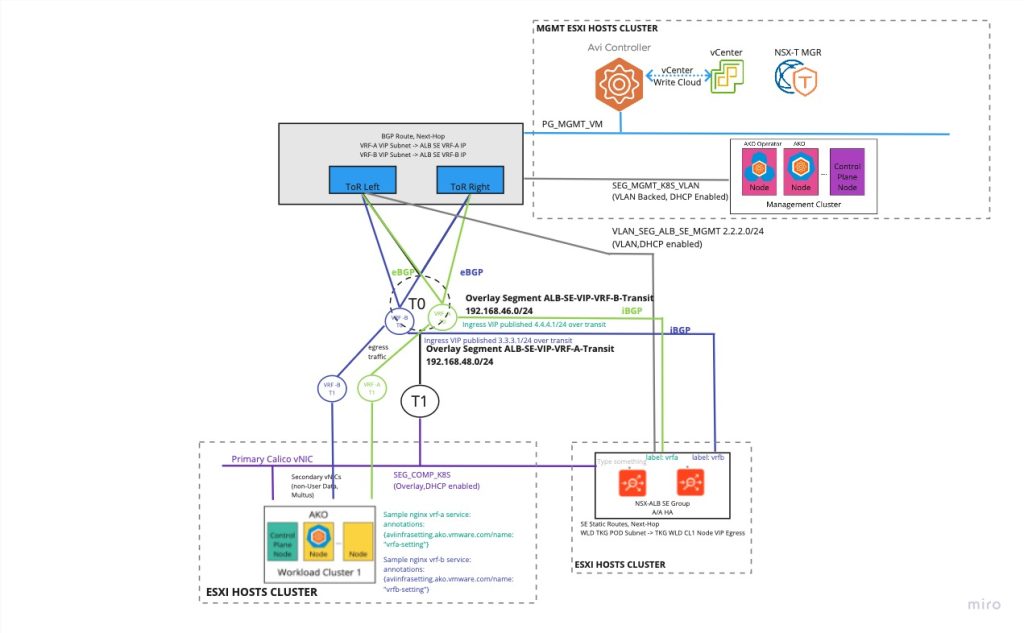

In order to advertise a VIP (Virtual IP) to our physical environment with its different VRFs, we connect the SEs to NSX-T T0 VRF routers. Requests to each application service are then forwarded to the backend Tanzu Kubernetes pods.

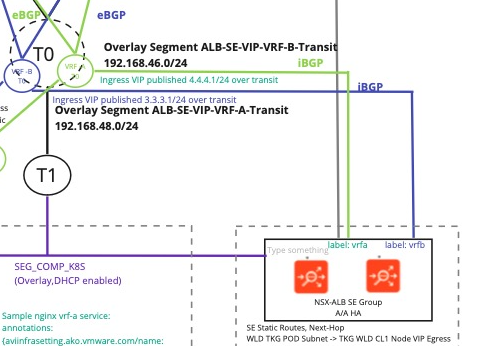

Let's look at the following logical design for AVI ALB with Tanzu for TCP. We have some Tanzu clusters set up with multiple VRFs, each going to its respective NSX-T T0 VRF gateway. We want to make sure that traffic for each pod in the Tanzu cluster goes in the correct direction, that is, to the correct VRF. We can accomplish this by using labels and annotations in TKG, combined with Multus CNI and Multus network attachment definitions that tell pods where to route their traffic.

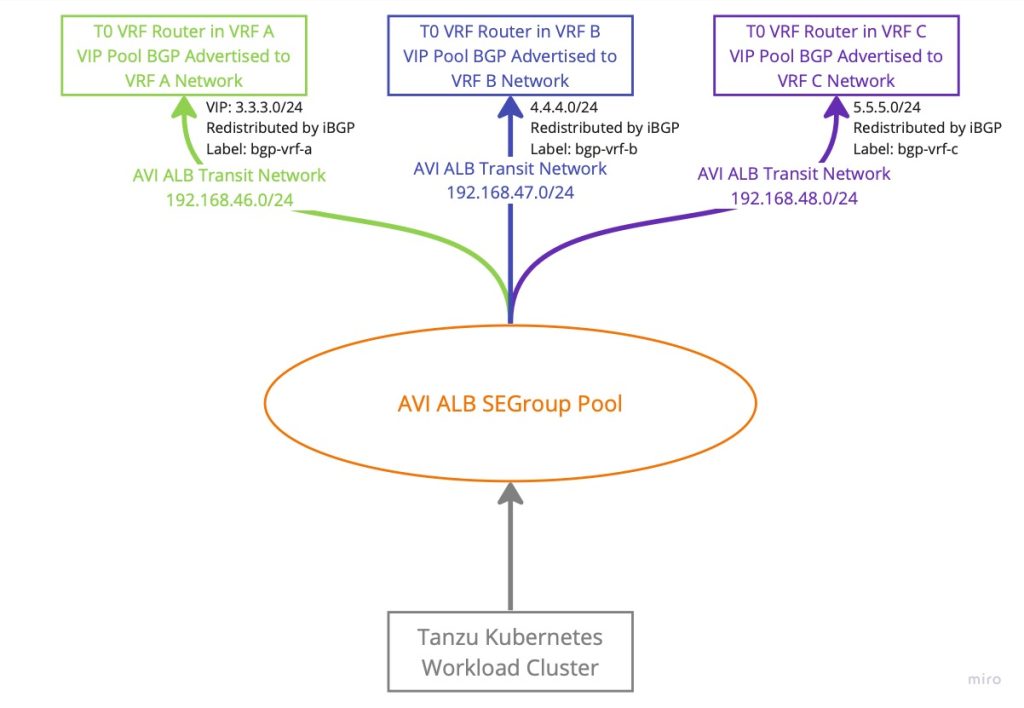
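To make the Multus part a bit more concrete, here is a minimal Python sketch that builds a network attachment definition and the matching pod annotation as plain dictionaries. The attachment name, the `macvlan` plugin, the `eth1` interface and the subnet are all assumptions made up for illustration; they are not taken from this deployment.

```python
import json


def build_network_attachment(name, master_if, subnet):
    """Return a Multus NetworkAttachmentDefinition manifest as a plain dict."""
    cni_config = {
        "cniVersion": "0.3.1",
        "type": "macvlan",  # assumption: CNI plugin used for the secondary NIC
        "master": master_if,  # hypothetical node interface facing the VRF
        "ipam": {"type": "host-local", "subnet": subnet},
    }
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name},
        # Multus expects the CNI configuration as a JSON string.
        "spec": {"config": json.dumps(cni_config)},
    }


def pod_network_annotation(*attachment_names):
    """Return the pod annotation asking Multus to attach the named networks."""
    return {"k8s.v1.cni.cncf.io/networks": ",".join(attachment_names)}


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    nad = build_network_attachment("vrf-a-net", "eth1", "10.10.1.0/24")
    print(json.dumps(nad, indent=2))
    print(pod_network_annotation("vrf-a-net"))
```

A pod that carries this annotation gets an extra interface on the named network, which is one way to point its traffic at a specific VRF.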

The picture below shows a conceptual design of how separation between networks can be set up with Avi ALB. Using labels, we advertise the traffic for our services to different BGP peers, each of which in turn connects to a specific VRF.

By using labels for BGP we can select the VRF in which to advertise the CNFs in the cluster.

There is a 1:1 relationship between the BGP peer and the label set on that peer in Avi ALB. Labels on BGP peers therefore give us a way to choose the VRF to which traffic for a CNF is advertised.
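The label idea can be sketched in a few lines of Python. This is only a conceptual model of the selection logic, not how Avi ALB implements it; the `BgpPeer` class, the `peers_for_labels` helper and the peer addresses are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BgpPeer:
    peer_ip: str
    label: str  # each peer carries exactly one label


def peers_for_labels(peers, wanted_labels):
    """Return the peers a VIP is advertised to, given the labels it asks for."""
    wanted = set(wanted_labels)
    return [peer for peer in peers if peer.label in wanted]


# Hypothetical peers: one T0 VRF neighbor per label.
PEERS = [
    BgpPeer("192.168.46.1", "vrfa"),
    BgpPeer("192.168.47.1", "vrfb"),
]

if __name__ == "__main__":
    # A service that should only be reachable in VRF A.
    for peer in peers_for_labels(PEERS, ["vrfa"]):
        print(peer.peer_ip, peer.label)
```

A service that asks for the label `vrfa` ends up advertised only to peers carrying that label, and so only into that VRF.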

Avi ALB Configuration with vSphere and NSX-T

ALB – vCenter Cloud connection

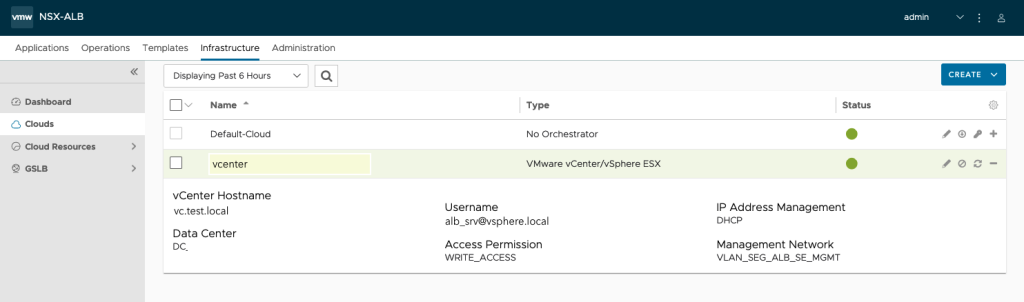

In order to set up AVI for use with Tanzu and the TCP stack, we need to connect the AVI Controllers to vSphere (in write access mode). A link describing how that can be done is here.

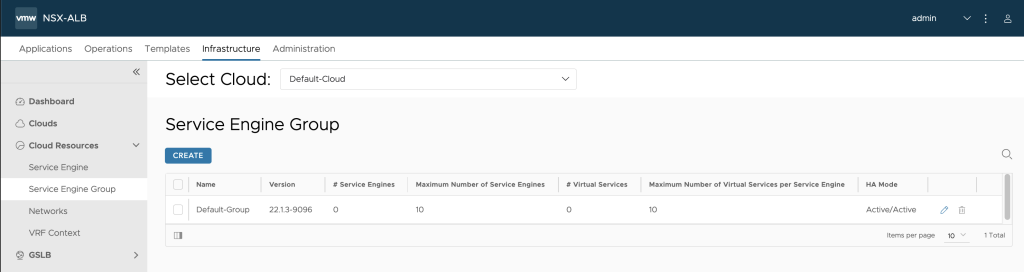

SE Group creation

Next up we head over to the Infrastructure -> Cloud Resources -> Service Engine Group section.

Here we set up an SE Group that we can later use for one of our Tanzu clusters. The SE Group is basically a template for how the SEs in the group should be sized, placed, and made highly available when they are deployed.

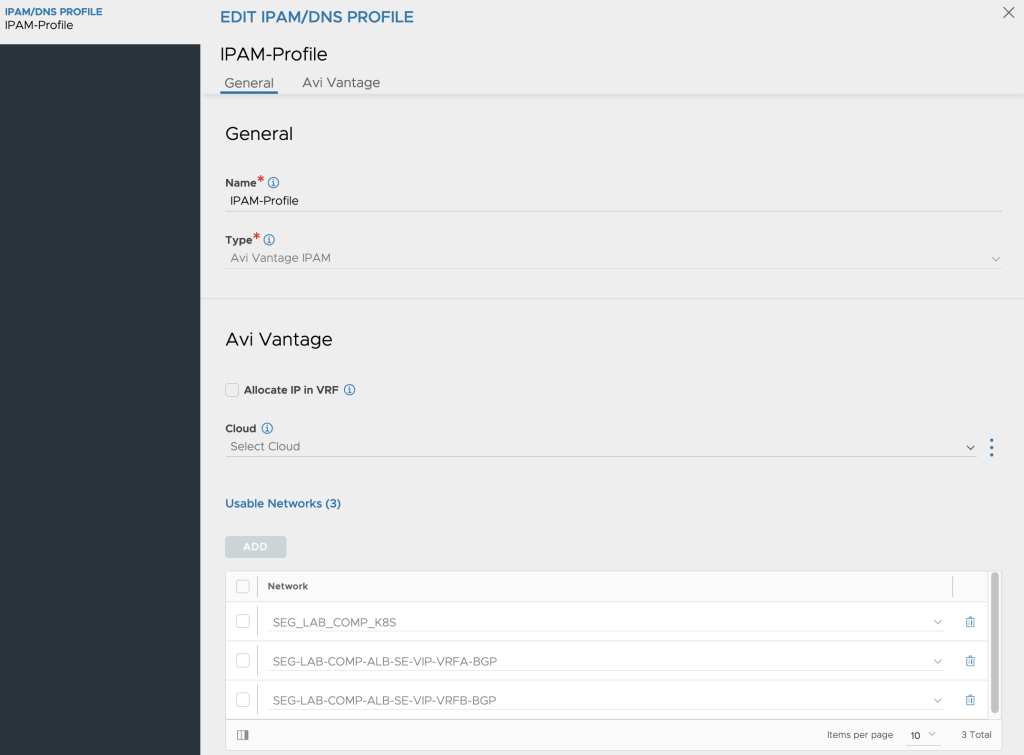

IPAM and DNS Profile

We also set up an IPAM profile in order to configure all the networks that should be usable for allocating IPs to the Service Engines placed on the workload backend network, for allocating the VIPs for Kubernetes services, and for advertising these VIPs to our BGP VRF peer routers.

In the picture below I have made sure to make the Tanzu workload network and two VIP BGP networks usable.

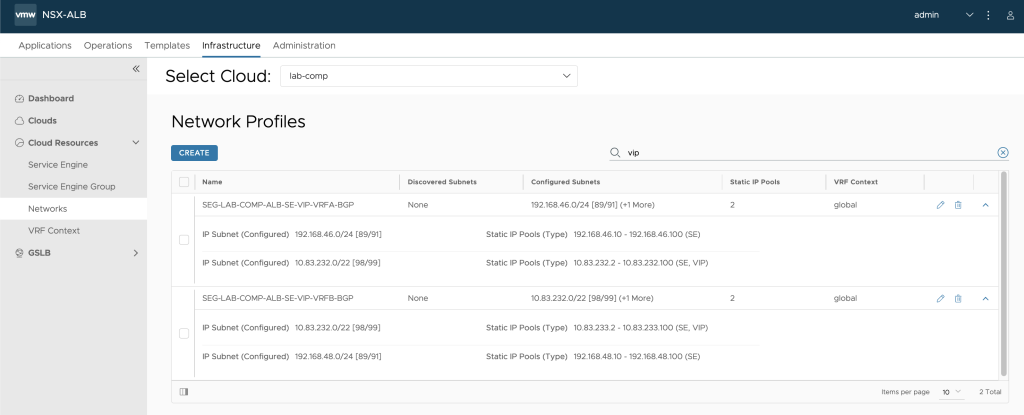

Configure network Segments

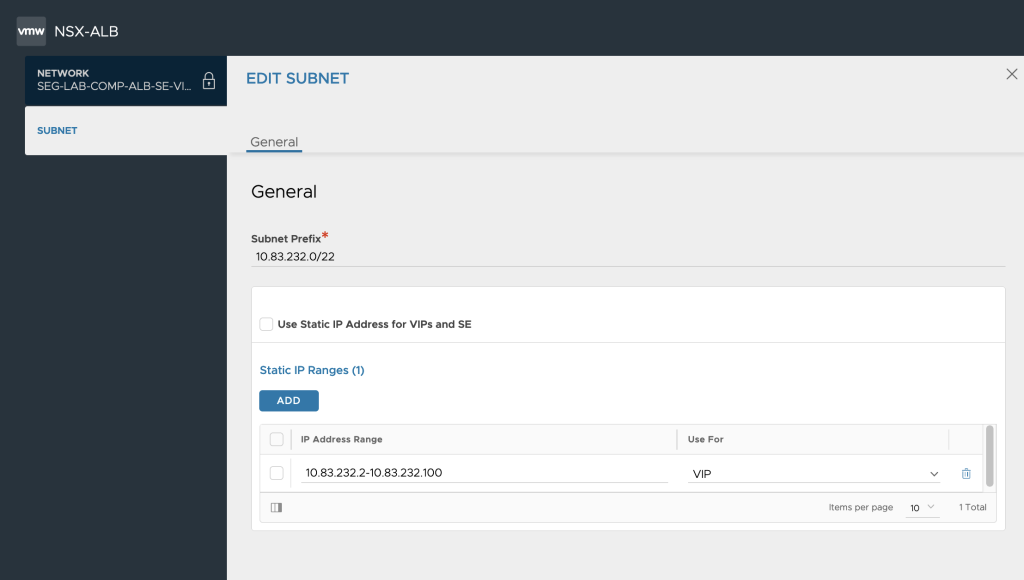

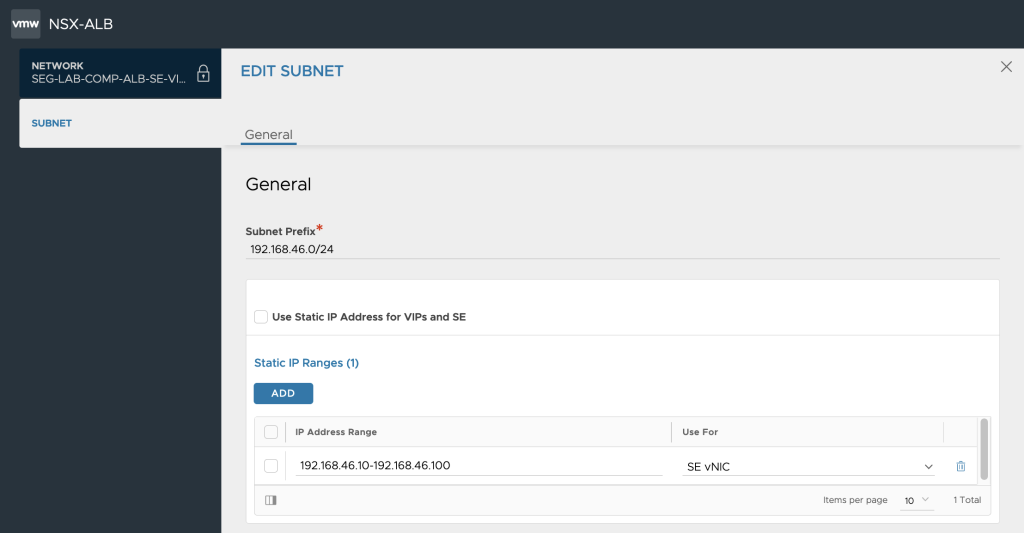

In this next bit we configure the network segments that we will use for VIPs and Service Engines.

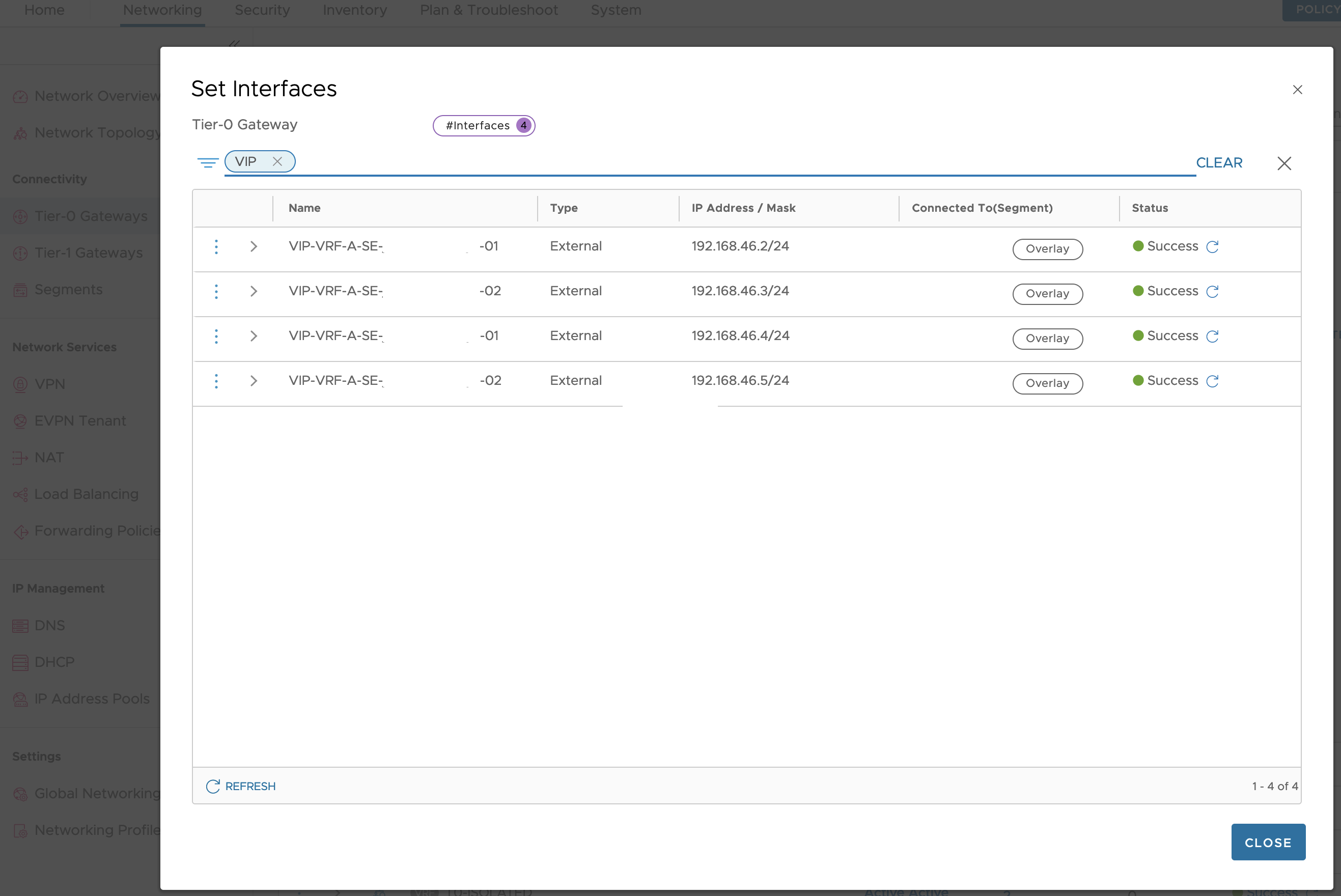

Here we can declare the AVI ALB transit network subnet along with the VIP subnet that will be BGP routed between the AVI ALB SEs and the NSX-T T0 VRFs.

One thing to note here is that every IP in the range provided for the SEs in the pool needs to be preconfigured as a BGP peer on the NSX-T T0 side, since NSX-T does not support dynamic BGP peering at this point in time.
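Because every address in the SE range has to exist as a static neighbor on the T0 side, it can help to list them up front. Here is a small Python sketch that expands a range into neighbor entries. The range end, the AS number and the dictionary keys are assumptions for illustration and do not follow the NSX-T API schema.

```python
import ipaddress
from typing import Dict, List


def expand_range(first: str, last: str) -> List[str]:
    """Return every IP address from first to last, inclusive."""
    start = ipaddress.ip_address(first)
    end = ipaddress.ip_address(last)
    if end < start:
        raise ValueError("last address must not be lower than first address")
    return [str(ipaddress.ip_address(i)) for i in range(int(start), int(end) + 1)]


def neighbor_entries(first: str, last: str, remote_as: int) -> List[Dict[str, object]]:
    """Build one static BGP neighbor entry per SE address in the range."""
    return [
        {"neighbor_address": ip, "remote_as": remote_as}
        for ip in expand_range(first, last)
    ]


if __name__ == "__main__":
    # Hypothetical SE range and AS number.
    for entry in neighbor_entries("192.168.46.10", "192.168.46.13", 65000):
        print(entry)
```

The resulting list is what would then have to be entered, one neighbor at a time, in the BGP configuration of the T0 VRF.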

ALB BGP Peer Setup

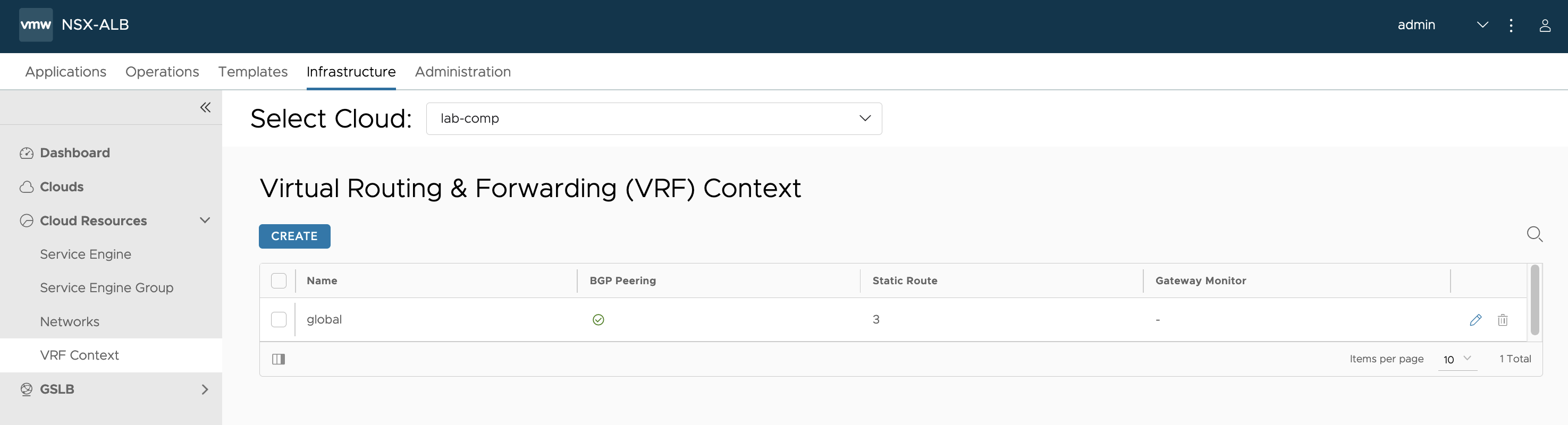

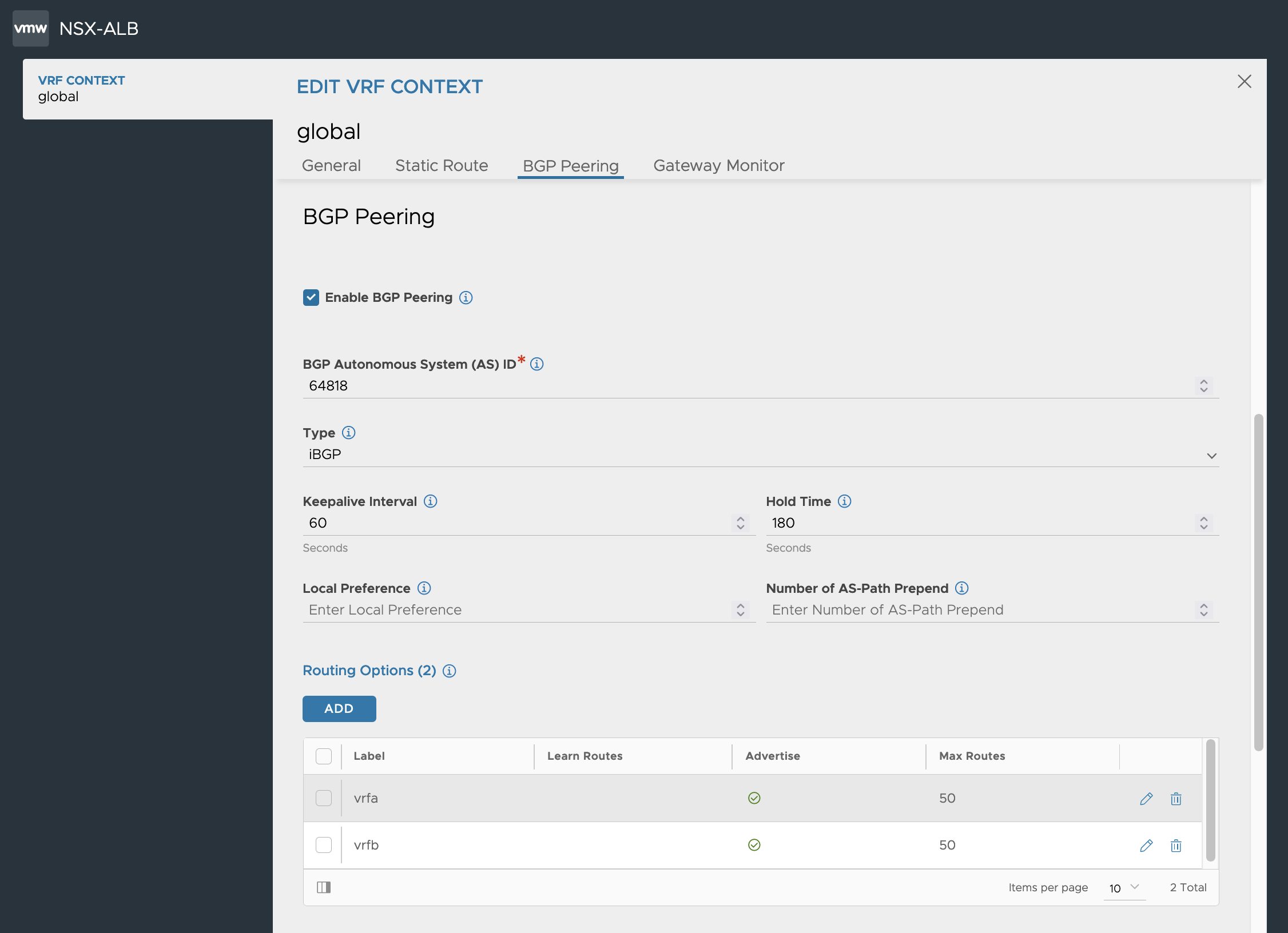

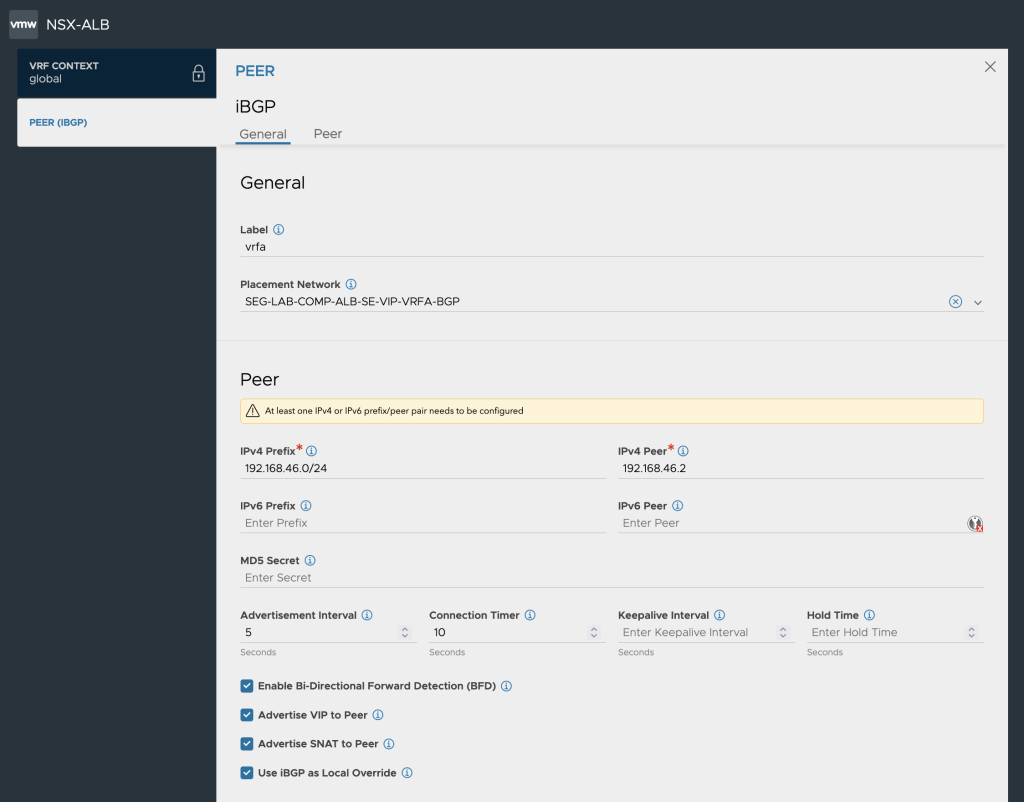

To set up BGP peers with specific labels in ALB we head over to Infrastructure -> VRF Context -> global.

Here we can create each BGP peer with its specific label, to be used later to steer traffic to the correct BGP peer.

We enable BGP peering, set it to iBGP, and match the BGP AS number to the one set in NSX-T in the T0 VRF BGP settings.

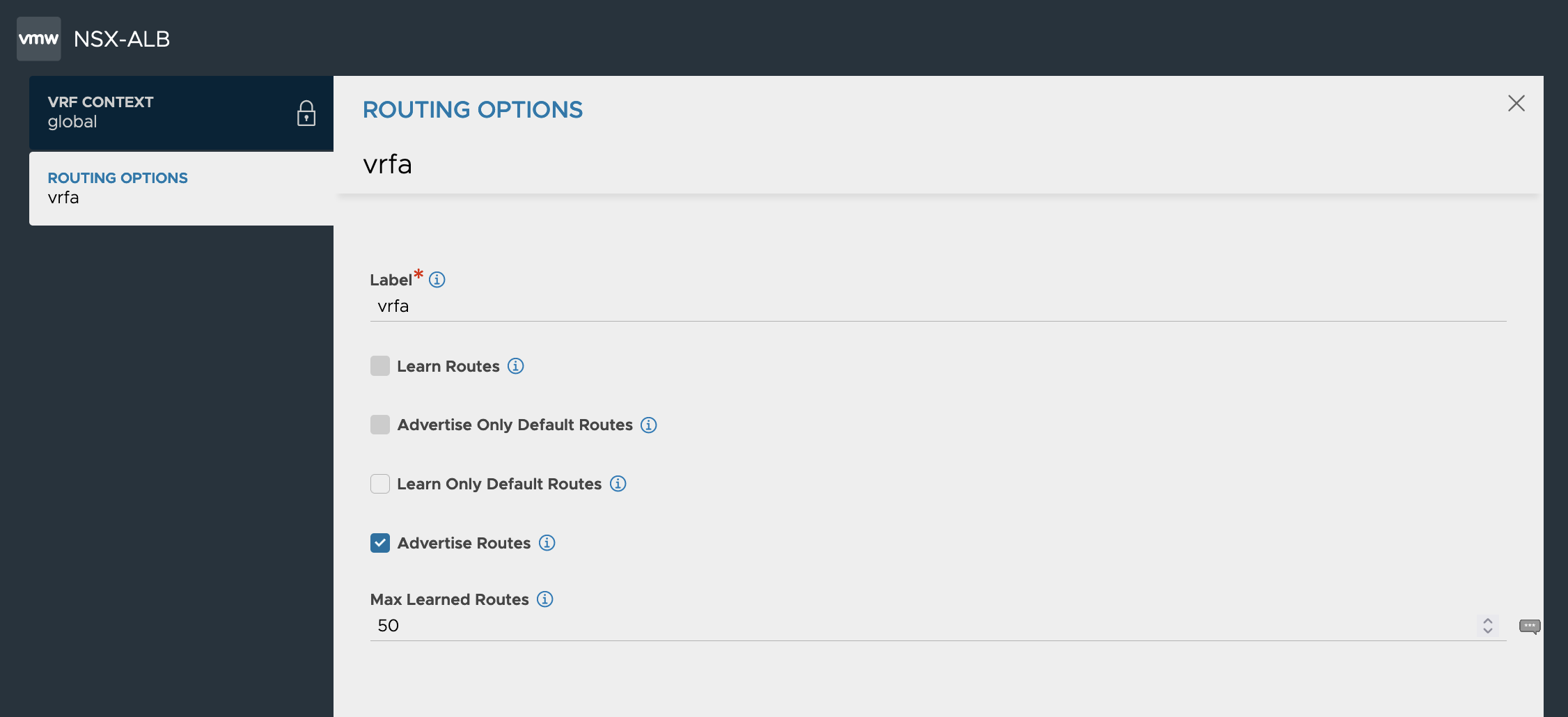

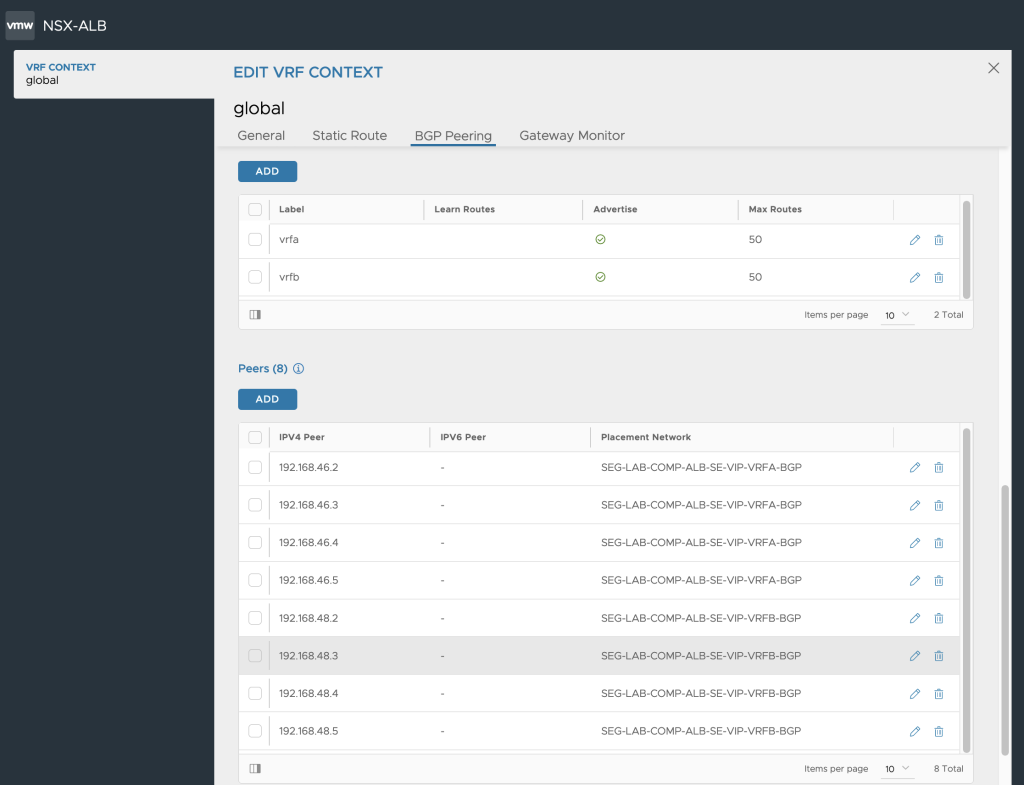

ALB BGP Peers Labeling

We create BGP peers and set a label (vrfa, vrfb) on each peer going up to the T0 VRF. Since my deployment has 4 Edge Nodes on which the T0 VRFs are instantiated, we need one BGP peer for each T0 uplink/edge. In this case we will have 8 peers, 4 for each BGP VRF. We also make sure to enable Advertise VIP to Peer and Use iBGP as Local Override.

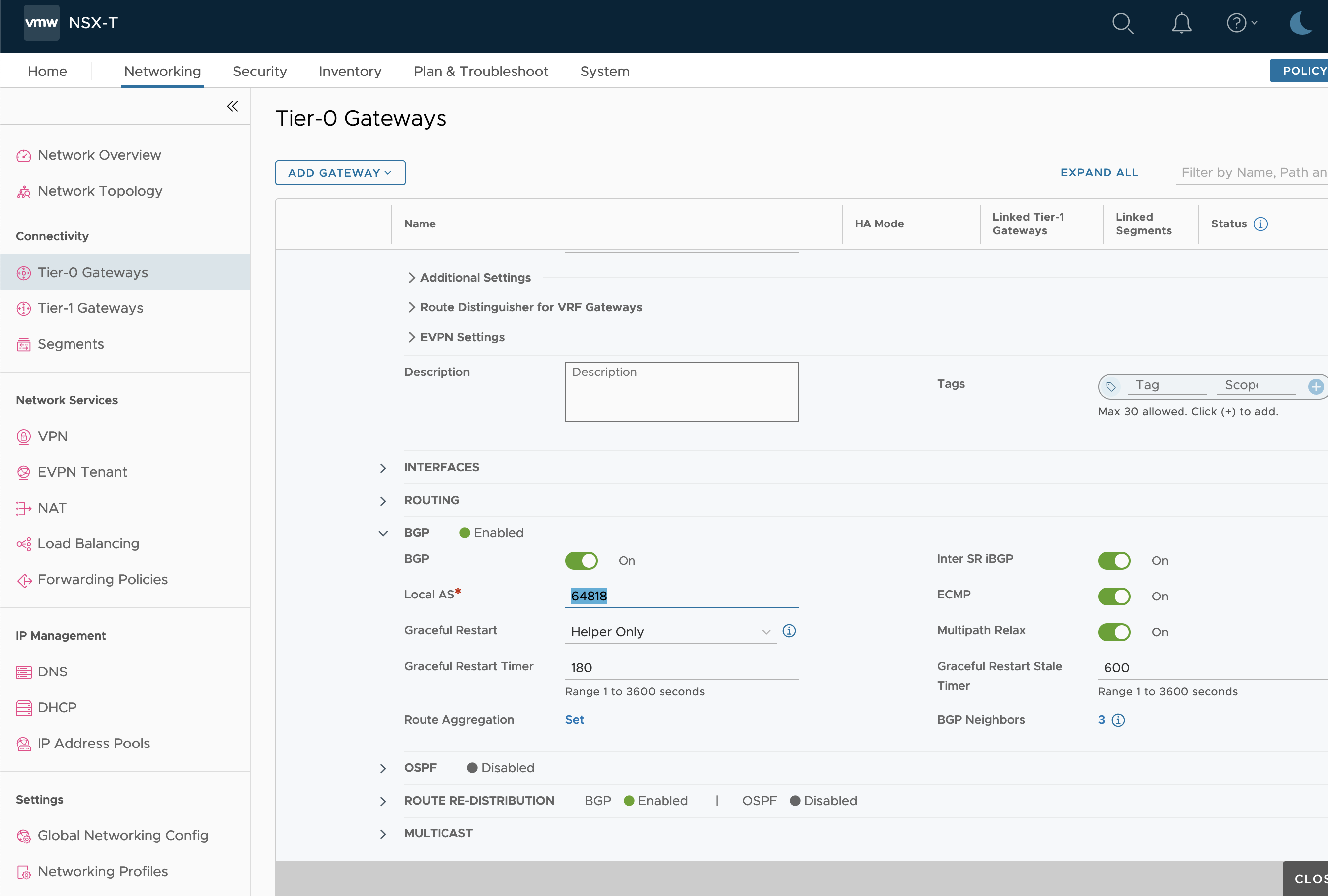

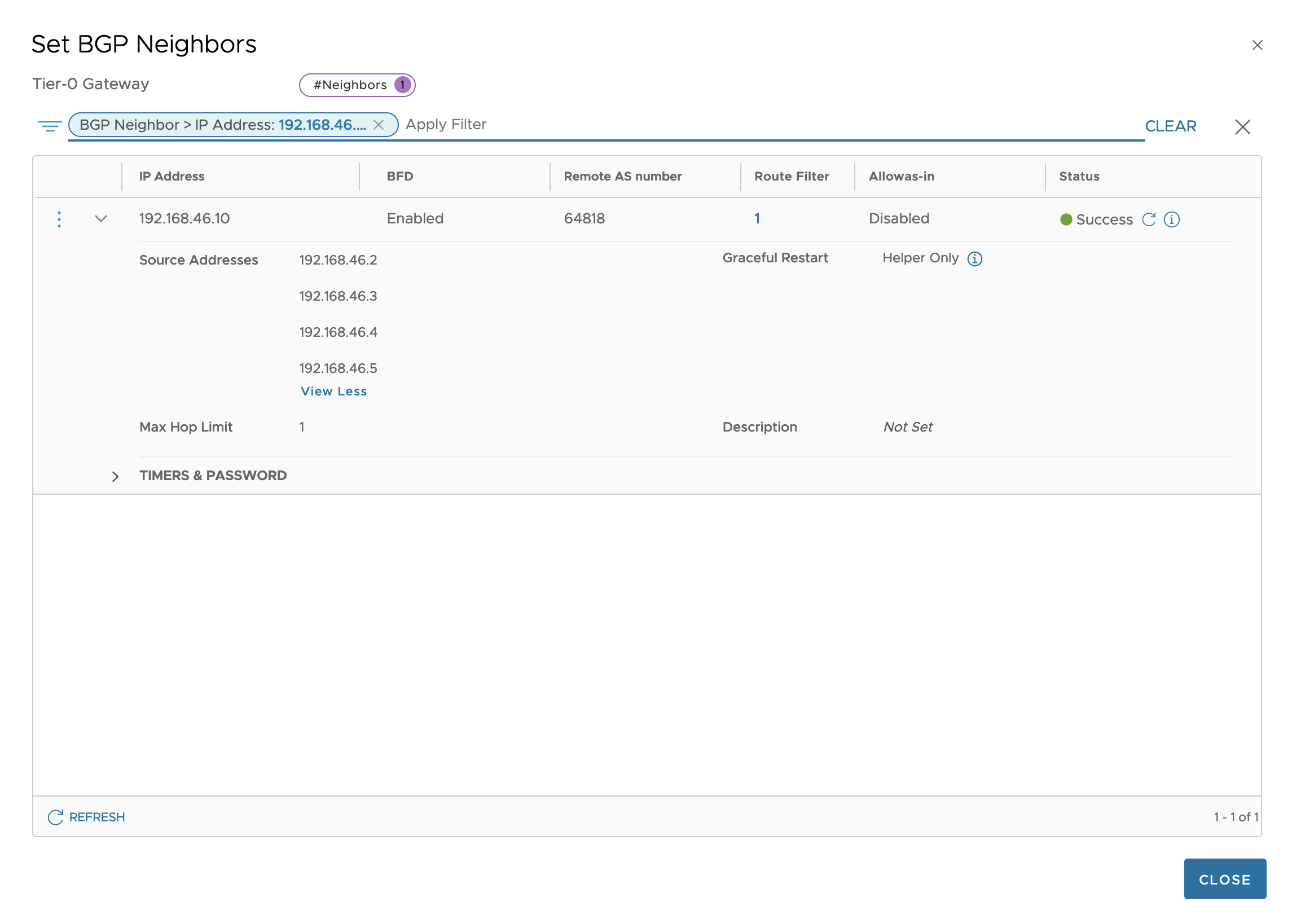
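The eight peers described above are simply every combination of VRF label and edge node. A short Python sketch of that enumeration follows; the edge names, the AS number and the dictionary keys are hypothetical and are not the Avi object schema.

```python
from itertools import product

EDGE_NODES = ["edge-1", "edge-2", "edge-3", "edge-4"]  # hypothetical names
VRF_LABELS = ["vrfa", "vrfb"]


def build_peers(edges, labels, local_as):
    """Return one peer definition per (label, edge) combination."""
    return [
        {
            "edge": edge,
            "label": label,
            # iBGP: the remote AS is the same as our own AS.
            "remote_as": local_as,
            "advertise_vip": True,
        }
        for label, edge in product(labels, edges)
    ]


if __name__ == "__main__":
    peers = build_peers(EDGE_NODES, VRF_LABELS, local_as=65000)  # assumed AS
    print(len(peers), "peers in total")
    for peer in peers:
        print(peer["label"], peer["edge"])
```

With 4 edges and 2 labels this gives 8 entries, 4 per VRF, which matches the peer list created in the UI.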

NSX-T BGP Peer Configuration

In this part of the setup we head over to NSX-T, which is responsible for the segments created for the Tanzu WLD clusters and also for BGP on the VRF T0 gateways. In NSX-T we want to create the receiving gateway end of the BGP transit network, so that ALB is able to redistribute the VIPs that will be created by Kubernetes services.

The ALB transit network that connects the NSX-T T0 VRFs with the Service Engines is set up as one interface per edge on the T0 VRF. We then create the BGP neighbor for the uplinks toward the SE transit peer. 192.168.46.10 is the BGP peer in ALB, as set in the network profile earlier when we configured the network segments.

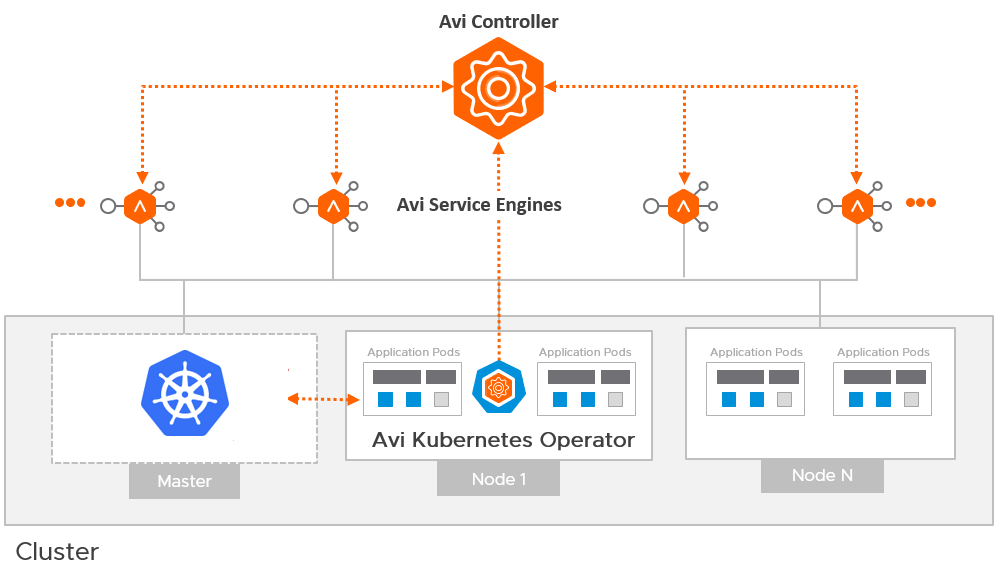

At this stage the BGP setup is complete, but we will not get an Established BGP session until we have actually deployed a service in Tanzu and published it as a Load Balancer. When a Load Balancer is requested as a service in Tanzu, the request is handled by the ingress controller provided by AVI: AKO, the Avi Kubernetes Operator.

AKO is a Kubernetes operator which works as an ingress controller and performs Avi-specific functions in a Kubernetes environment with the Avi Controller. It runs as a pod in the cluster and translates the required Kubernetes objects to Avi objects and automates the implementation of ingresses/routes/services on the Service Engines (SE) via the Avi Controller.

So what we need to do now is head over to TCA (Telco Cloud Automation) and start onboarding AKO as a CNF (Containerized Network Function) with a CSAR. The CSAR is created in the CSAR Design Board in TCA.

After that has been done, we can start onboarding a sample application that needs a Load Balancer and has an annotation set in order for the correct VIP to be created on the correct VRF. Then we will get an Established BGP session.

That will be part 2 in this blog series.
