Fix – VMware Telco Cloud Automation (Broadcom): containerd service will not start after a disk resize

I recently had a case with a customer where we resized the disks of Telco Cloud Automation (TCA), and after a reboot the TCA services would, for some reason, not start again.

After a lot of troubleshooting, we concluded that the problem was containerd not starting correctly.

This post describes a workaround to get containerd running again.

When running journalctl -xfe, we saw a lot of noise from containerd failing to run its CRI service. Below is an example of the error:

1. Collect the logs for Containerd

journalctl -xe --unit containerd -f

Dec 10 13:06:13 tcamgr.catbird.local containerd[8635]: time="2024-12-10T13:06:13.836586150Z" level=fatal msg="Failed to run CRI service" error="failed to recover state: failed to reserve container name \"tca-database-admin-service_tca-database-admin-service-6cd59cf46f-thzt6_tca-mgr_79f08f9b-790e-471a-90ff-b663ecbb94a3_9\": name \"tca-database-admin-service_tca-database-admin-service-6cd59cf46f-thzt6_tca-mgr_79f08f9b-790e-471a-90ff-b663ecbb94a3_9\" is reserved for \"131388c0f5dfd2c73c35650c148b54148a3df321c38b6eeebd9c36f8ee534554\""
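If the journal is noisy, it can help to pull out just the conflicting container name and the ID it is reserved for. Below is a minimal sketch using grep and sed; show_reserved_name_conflict is my own hypothetical helper name, and the pattern assumes the message format shown in the log line above.

```shell
#!/bin/sh
# Hypothetical helper: read containerd log lines on stdin and print
# "<container name> <ID it is reserved for>" for every
# "failed to reserve container name" error.
# Assumes the message format seen in the journal output above, where the
# quotes around the name and the ID appear as literal \" sequences.
show_reserved_name_conflict() {
    grep -F 'failed to reserve container name' |
        sed -n 's/.*name \\"\([a-z0-9_.-]*\)\\" is reserved for \\"\([0-9a-f]*\)\\".*/\1 \2/p'
}
```

For example, `journalctl -u containerd --no-pager | show_reserved_name_conflict | sort -u` should list each conflicting name once, followed by the ID that already holds it.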

The error message shows that containerd fails to recover its state: the container name it tries to reserve is already reserved for a different container ID, so the CRI service exits with a fatal error and no containers can start.

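2. Disable the CRI plugin in containerd

To work around this, temporarily disable the CRI plugin so that containerd can start without trying to recover the CRI state. Edit /etc/containerd/config.toml and, on the top line, add the CRI plugin name io.containerd.grpc.v1.cri to disabled_plugins. The original line is kept as a comment so it is easy to restore later: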

vi /etc/containerd/config.toml

[ /home/admin ]# cat /etc/containerd/config.toml
disabled_plugins = ["io.containerd.grpc.v1.cri"]
#disabled_plugins = []
imports = []
oom_score = 0
plugin_dir = ""
required_plugins = []
root = "/var/lib/containerd"
state = "/run/containerd"
temp = ""
version = 2

[cgroup]
path = ""

[debug]
address = ""
format = ""
gid = 0
level = ""
uid = 0

[grpc]
address = "/run/containerd/containerd.sock"
gid = 0
max_recv_message_size = 16777216
max_send_message_size = 16777216
tcp_address = ""
tcp_tls_ca = ""
tcp_tls_cert = ""
tcp_tls_key = ""
uid = 0

[metrics]
address = ""
grpc_histogram = false

[plugins]

[plugins."io.containerd.gc.v1.scheduler"]
deletion_threshold = 0
mutation_threshold = 100
pause_threshold = 0.02
schedule_delay = "0s"
startup_delay = "100ms"

[plugins."io.containerd.grpc.v1.cri"]
device_ownership_from_security_context = false
disable_apparmor = true
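If you would rather script the edit than do it in vi, something like the sketch below should make the same change to the top line. It rests on a few assumptions: disable_cri_plugin is a hypothetical helper name, the .bak backup is my own addition, it relies on GNU sed for in-place editing (-i), and it expects the top-level line to read exactly disabled_plugins = [] as in the default file.

```shell
#!/bin/sh
# Hypothetical helper: disable the CRI plugin by rewriting the top-level
# disabled_plugins line in containerd's config.toml.
# Assumes GNU sed (-i) and that the file contains: disabled_plugins = []
disable_cri_plugin() {
    cfg="${1:-/etc/containerd/config.toml}"
    # Keep a copy of the untouched file so it can be restored later
    cp "$cfg" "$cfg.bak" || return 1
    # Swap the default empty list for one that disables the CRI plugin
    sed -i 's/^disabled_plugins = \[\]$/disabled_plugins = ["io.containerd.grpc.v1.cri"]/' "$cfg" || return 1
    # Succeed only if the CRI-disabling line is now present
    grep -q '^disabled_plugins = \["io\.containerd\.grpc\.v1\.cri"\]$' "$cfg"
}
```

Run it as root, for example `disable_cri_plugin /etc/containerd/config.toml`; the .bak copy can then be used to put the original file back once the cleanup is done.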

3. Restart the containerd service

systemctl stop containerd.service

systemctl start containerd.service
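Because the original failure was a fatal exit during startup, it is worth checking that containerd actually stays up after the restart. A minimal sketch with standard systemctl subcommands; restart_and_check_containerd is a hypothetical helper name and the two-second pause is an arbitrary choice.

```shell
#!/bin/sh
# Hypothetical helper: stop and start containerd, then report whether the
# unit is still active. Returns non-zero if containerd did not stay up.
restart_and_check_containerd() {
    systemctl stop containerd.service
    systemctl start containerd.service
    # Give a fatal startup error a moment to take the service down
    sleep 2
    if systemctl is-active --quiet containerd.service; then
        echo "containerd is active"
    else
        echo "containerd is NOT active; check: journalctl -u containerd -e" >&2
        return 1
    fi
}
```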

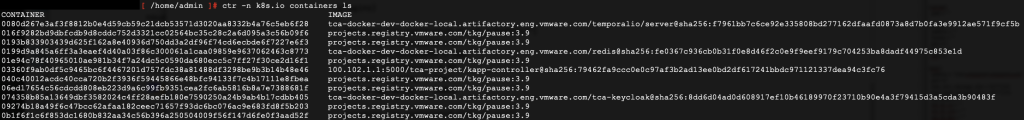

4. List the containers in containerd

ctr -n k8s.io containers ls

5. Delete all containers in the k8s.io namespace

ctr -n k8s.io c rm $(ctr -n k8s.io c ls -q)
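When the k8s.io namespace is already empty, the one-liner above ends up calling ctr c rm without any container IDs, which I would expect to fail with an error rather than do nothing. A slightly more defensive sketch lists first and only deletes when there is something to delete; remove_k8s_containers is a hypothetical helper name, and like the original command it only touches the k8s.io namespace.

```shell
#!/bin/sh
# Hypothetical helper: delete every container in containerd's k8s.io
# namespace, skipping the delete call when the namespace is already empty.
remove_k8s_containers() {
    ids=$(ctr -n k8s.io containers ls -q) || return 1
    if [ -z "$ids" ]; then
        echo "no containers in k8s.io namespace"
        return 0
    fi
    # Split the newline-separated IDs into positional arguments
    set -- $ids
    echo "deleting $# containers"
    ctr -n k8s.io containers rm "$@"
}
```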

6. Stop the containerd service, edit config.toml again, and change disabled_plugins back to its default value.

systemctl stop containerd.service

vi /etc/containerd/config.toml

[ /home/admin ]# cat /etc/containerd/config.toml
disabled_plugins = []
imports = []
oom_score = 0
plugin_dir = ""
required_plugins = []
root = "/var/lib/containerd"
state = "/run/containerd"
temp = ""
version = 2
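The revert can be scripted as well. A minimal sketch, assuming GNU sed and that the top line reads exactly disabled_plugins = ["io.containerd.grpc.v1.cri"] as in the config listing earlier; enable_cri_plugin is a hypothetical helper name. A commented-out #disabled_plugins = [] line, if present, is left alone, since TOML ignores comments.

```shell
#!/bin/sh
# Hypothetical helper: set disabled_plugins back to the default empty list
# so the CRI plugin loads again on the next containerd start.
# Assumes GNU sed (-i) and the exact CRI-disabling line shown earlier.
enable_cri_plugin() {
    cfg="${1:-/etc/containerd/config.toml}"
    sed -i 's/^disabled_plugins = \["io\.containerd\.grpc\.v1\.cri"\]$/disabled_plugins = []/' "$cfg" || return 1
    # Succeed only if the default line is now present
    grep -q '^disabled_plugins = \[\]$' "$cfg"
}
```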

7. Start containerd again. With the CRI plugin enabled, the pods should start coming back after some time, which you can follow by listing the containers:

systemctl start containerd.service

ctr -n k8s.io containers ls
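Instead of re-running the list command by hand, a small loop can poll until containers reappear in the k8s.io namespace. This is a sketch: wait_for_k8s_containers is a hypothetical helper, the 600-second timeout and 10-second interval are arbitrary defaults, and seeing containers again only means the CRI plugin has started recreating them, not that every TCA pod is healthy.

```shell
#!/bin/sh
# Hypothetical helper: poll containerd's k8s.io namespace until at least one
# container exists or the timeout (in seconds) expires.
wait_for_k8s_containers() {
    timeout="${1:-600}"
    interval="${2:-10}"
    waited=0
    while [ "$waited" -lt "$timeout" ]; do
        # grep -c . counts non-empty lines and prints 0 when there are none
        count=$(ctr -n k8s.io containers ls -q 2>/dev/null | grep -c .)
        if [ "$count" -gt 0 ]; then
            echo "$count containers present after ${waited}s"
            return 0
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
    echo "no containers after ${timeout}s" >&2
    return 1
}
```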

It is best to now reboot the TCA Manager completely so that all related services start in the correct order.
